Docker is a pretty amazing tool.

To prove it, I want to show you how you can build, deploy, and stand up an N-tier Angular app backed by MongoDB in just three steps. Literally. Without installing any prerequisites other than Docker itself.

First, seeing is believing. Once you have Docker installed (OK, and git, too), type the following commands:

git clone https://github.com/JeremyLikness/usda-microservice.git

cd usda-microservice

docker-compose up

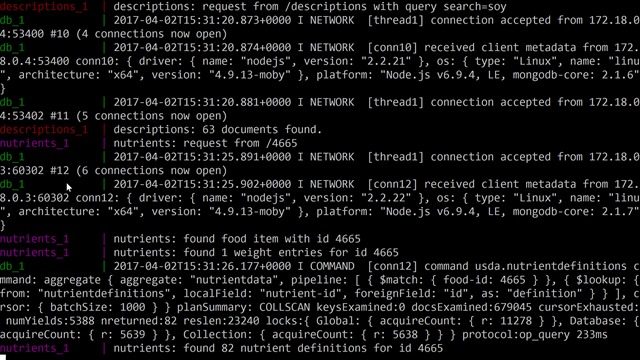

It will take some time for everything to spin up, but once it does you should see several services start in the console. You’ll know the application is ready when the console shows that it has imported the USDA database.

After the import you should be able to navigate to localhost and run the app. Here is an example of searching for the word “soy” and then tapping “info” on one of the results:

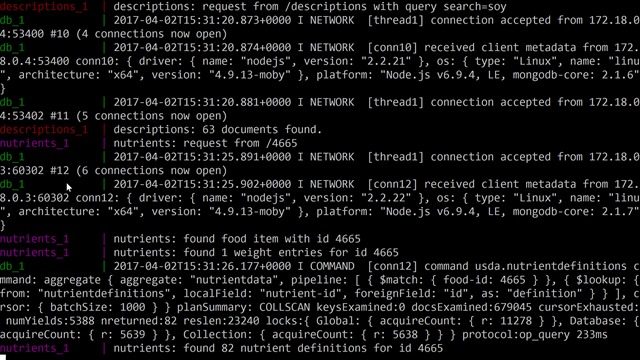

On the console, you can see the queries in action as you use the app.

How easy was that?

Of course, you might find yourself asking, “What just happened?” The orchestration of the various steps all begins with the docker-compose.yml file.

The file declares a set of services that work together to define the app. Services can depend on each other and often specify an image to use as a baseline for building a container, as well as a Dockerfile to describe how the container is built. Let’s take a look at what’s going on:

seed

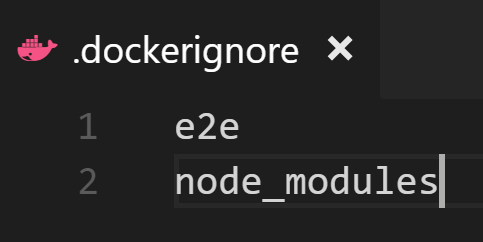

The seed service specifies a Dockerfile named Dockerfile-seed. The entire purpose of this image is to package the USDA data (as flat files) along with some helper scripts, then expose them through a volume so that the data can be imported into the database. It is based on the existing Ubuntu base image.

Containers by default are black boxes. You cannot communicate with them and are unable to explore their contents. The VOLUME instruction exposes a mount point to share data. The Dockerfile simply updates the base image, creates a directory, copies over a script and an archive, then unzips the archive and changes permissions.
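As a rough sketch, the seed entry in docker-compose.yml might look something like the fragment below. Only the Dockerfile name comes from the description above; the file format version and the build context are assumptions made for illustration, so check the repository for the real values.

```yaml
# Sketch only, not the repository's actual file.
version: "2"                       # assumed compose file format version
services:
  seed:
    build:
      context: .                   # assumption: Dockerfile-seed lives at the repo root
      dockerfile: Dockerfile-seed  # Dockerfile name as described in the text
```

Pointing `dockerfile` at a non-default name is what lets one repository hold several Dockerfiles side by side.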

db

The db service is the backend database. It inherits from the baseline mongo image, which provides a pre-configured and ready-to-run instance of MongoDB.

You’ll notice a command is specified to run the shell script seed.sh. This is a bash script that does the following:

- Launch MongoDB

- Wait until it is running

- Iterate through the food database files and import them into the database

- Swap to the foreground so it continues running and can be connected to

At this point, Docker has created an interim container to stage data, then used that data to populate a MongoDB database running in a container built from an image in the public, trusted registry. The database is now ready for connections and queries.
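To make that concrete, here is a hedged sketch of how the db service could be wired to the seed data. The `image` and the use of a `command` follow the description above. The `volumes_from` key (available in the version 2 file format), the script path, and the format version itself are assumptions for illustration only.

```yaml
# Sketch only, not the repository's actual file.
version: "2"                  # assumed; volumes_from is not available in version 3
services:
  db:
    image: mongo              # baseline MongoDB image from the public registry
    volumes_from:
      - seed                  # assumption: mount the volume exposed by the seed service
    command: /seed/seed.sh    # hypothetical path to the seed script
    # Note: no "ports" key, so nothing is published to the host.
```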

descriptions

The next service has a directory configured for the build (“./descriptions”), so you can view the Dockerfile in that directory to discern its steps. This is an incredibly simple file. It leverages a Node image that contains build triggers (ONBUILD instructions). Triggers let a base image define steps that run when a derived image is built.

In this instance, the app is a Node app using micro. The build steps simply copy the contents into the image, run an install to load dependent packages, then commit the image. This leaves you with an image whose containers run the microservice on port 3000 using Node.

Going back to the compose file, there are two more keys, called “links” and “ports” respectively. In this configuration, the MongoDB container is not reachable from outside of the Docker host. That is because no ports are published as part of its definition.

The “links” directive allows the microservice to connect to the container running the database by name. This keeps the connection internal: the microservice can see the database, but the database is not visible outside of the Docker host. (With version 2 and later of the compose file format, services on the same default network can also reach each other by service name, so the link mostly documents the relationship.)

On the other hand, because this service will be called from an Angular app hosted in a user’s web browser, it must be exposed outside of the host. The “ports” directive maps the internal port 3000 to an external port 3000 so the microservice is accessible.
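Put together, the descriptions entry might look roughly like this. The build directory, the link to db, and the port mapping come from the text; the file format version is an assumption.

```yaml
# Sketch only, not the repository's actual file.
version: "2"            # assumed compose file format version
services:
  descriptions:
    build: ./descriptions   # Dockerfile is read from this directory
    links:
      - db                  # lets the microservice reach the database as "db"
    ports:
      - "3000:3000"         # host port 3000 -> container port 3000
```

The port mapping is written as `"HOST:CONTAINER"`, and quoting it avoids YAML interpreting the value as something other than a string.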

This service exposes two functions: a list of all groups that the user can filter, and a list of descriptions of nutrients based on search text.

nutrients

Nutrients is another microservice that is set up identically to descriptions. It exposes the individual nutrients for a description that was selected. The only difference in configuration is that, because it runs on the same port (3000) internally, it is mapped to a different port (3001) externally to avoid a port conflict on the host.
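A sketch of the corresponding entry shows that only the host side of the mapping changes. The `./nutrients` build directory and the link to db are assumptions based on the statement that the service mirrors descriptions.

```yaml
# Sketch only, not the repository's actual file.
version: "2"            # assumed compose file format version
services:
  nutrients:
    build: ./nutrients      # assumption: mirrors the descriptions layout
    links:
      - db                  # assumption: same database link as descriptions
    ports:
      - "3001:3000"         # host port 3001 -> container port 3000
```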

ngbuild

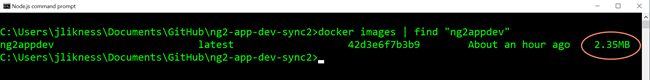

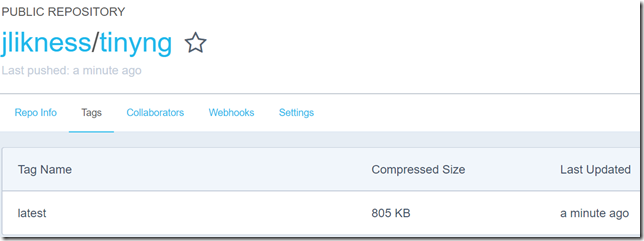

This image points to an Angular app and is used as an interim container to build the app (in production deployments it is more common to have a dedicated build box perform this step). I included this to demonstrate how powerful and flexible containers can be.

Inside the Dockerfile, the script installs Node and the Node package manager (npm), then the specific version of the angular-cli used to build the app. Once the Angular CLI is installed, a target directory is created. Dependent packages are installed using npm, and the Angular CLI is called to produce a production-ready build with ahead-of-time compilation of templates. The result is a highly optimized bundle.

The compose file specifies a volumes directive that names “ng2”. This is a mount point to share storage between containers. The ngbuild service mounts “ng2” to “/src/dist”, which is where the build is output.

web

Finally, the web service hosts the Angular app. There is no Dockerfile because it is completely based on an existing nginx image. The “ng2” mount points to “/usr/share/nginx/html” which is where the container serves HTML pages from by default. The “ng2” shared volume connects the output of the build from ngbuild to the input for the web server in web.
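The hand-off between the two services can be sketched as follows. The volume name and both mount paths come from the text; the `./ngbuild` build directory and the file format version are assumptions.

```yaml
# Sketch only, not the repository's actual file.
version: "2"                       # assumed compose file format version
services:
  ngbuild:
    build: ./ngbuild               # assumption: directory holding the build Dockerfile
    volumes:
      - ng2:/src/dist              # build output lands in the shared volume
  web:
    image: nginx                   # stock nginx image, no Dockerfile needed
    volumes:
      - ng2:/usr/share/nginx/html  # nginx serves the same files by default
volumes:
  ng2:                             # named volume shared by both services
```

Because both services mount the same named volume, whatever ngbuild writes to `/src/dist` shows up in the directory nginx serves from.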

This app uses the micro-locator service I created to help locate services in apps. The environment.ts file maps configuration to endpoints. This allows you to specify different endpoints for debug vs. production builds. In this case the root service is mapped to port 3000, while the nutrients service is mapped to the root of port 3001.

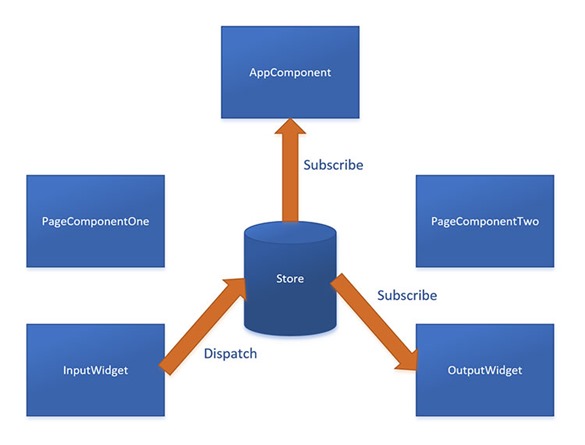

Even though the services are running on different ports, the micro-locator package allows the code to call a consistent set of endpoints. You can see this in the descriptions component, which simply references “/descriptions” and “/groups” and uses the micro-locator service to resolve them in its constructor.

They are mapped to the same service in configuration, but if groups was later pulled out to a separate endpoint, the only thing you would need to change is the configuration of the locator itself. The calling code remains the same.

The standard web port 80 is exposed for access, and the service is set to depend on descriptions so it doesn’t get spun up until after the microservices it depends on are.
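In compose terms, those two settings might look like the fragment below. The port and the dependency on descriptions come from the text; the exact mapping syntax and the file format version are assumptions.

```yaml
# Sketch only, not the repository's actual file.
version: "2"            # assumed compose file format version
services:
  web:
    image: nginx
    ports:
      - "80:80"             # publish the standard web port on the host
    depends_on:
      - descriptions        # start descriptions before web
```

Keep in mind that `depends_on` only controls start order. It does not wait for the dependency to be ready to accept requests.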

Summary

The purpose of this project is to demonstrate the power and flexibility of containers. Specifically:

- Availability of existing, trusted images to quickly spin up instances of databases or node-based containers, for example

- Least privilege security by only allowing “opt-in” access to services and file systems

- The ability to compose services together and share common resources

- The ease of set up for a development environment

- The ability to build in a “clean” environment without having to install prerequisites on your own machine

Although these features make development and testing much easier, Docker also provides powerful features for managing production environments. These include the ability to scale services out on the fly, and plug into orchestrators like Kubernetes to scale across hosts for zero downtime and to manage controlled roll-outs (and roll-backs) of version changes.

Happy containers!