I’ve heard a lot of complaints about 2016 in general, but for me it was an abundant year. Personally, I enjoyed the challenge I gave myself to speak more often than in 2015, and I believe I succeeded by giving well over a dozen talks on topics ranging from Angular 2 and TypeScript to DevOps.

This year I became a Samsung fan. I traded in my Windows Phone for a Samsung Galaxy S7. I also purchased the Oculus-powered Gear VR and have enjoyed it more than I imagined. I still spend time in VR every week and find the immersive experience far more rewarding than the augmented reality of the HoloLens.

I ditched my Microsoft Band 2 after having to replace it under warranty for the third time. I waited patiently and picked up a Samsung Gear S3.

After a lot of research I decided I didn’t need a hardcore fitness band and would prefer a smartwatch with great fitness features. The Gear S3 looks sharp.

It has great battery life (over a day, so I can always find time to charge), recharges wirelessly and quickly, and has great functionality. For example, you’d think setting reminders using just your voice or answering the phone through the microphone and speaker in your watch are novelty items, but I’ve done both in practice and they’ve helped me out in situations when I didn’t want to pull my phone from my pocket.

The fitness features are phenomenal. It has great detection for steps and flights of stairs, very accurate heart rate monitoring (I know because I check mine manually quite frequently), automatic exercise detection, and a running mode that makes it easy to see your lap time, stride, and other stats “on the run.”

Trust me, I didn’t receive any of these items as promotional gear and was surprised to find myself getting so many Samsung products. The most fun I had was with my Gear 360. It boasts two fish-eye lenses, each with slightly more than 180 degrees of view, so it can simultaneously capture all directions and digitally glue the seams. I purchased it to capture the summits of mountains we climbed over the summer, like our first 14,000-foot peak (click the images to scroll in 360 degrees):

…and the grueling half-marathon hike up Pikes Peak:

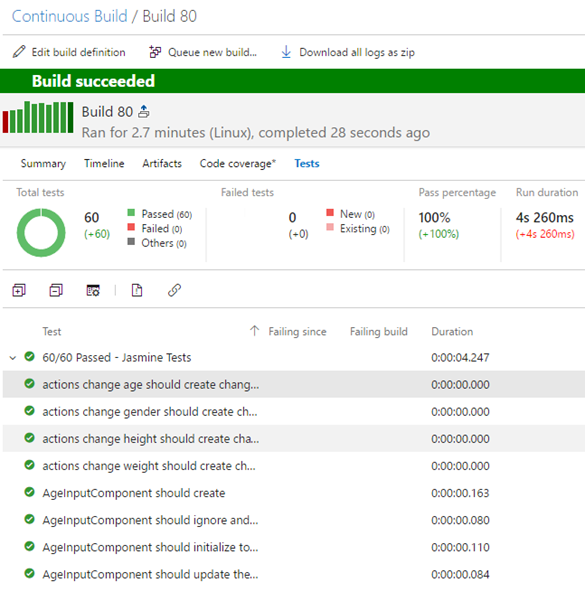

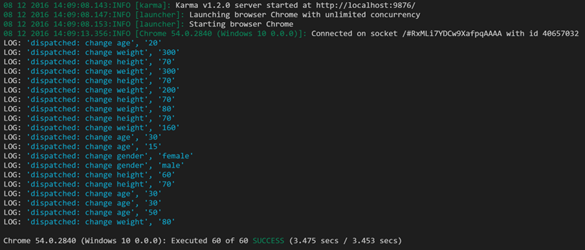

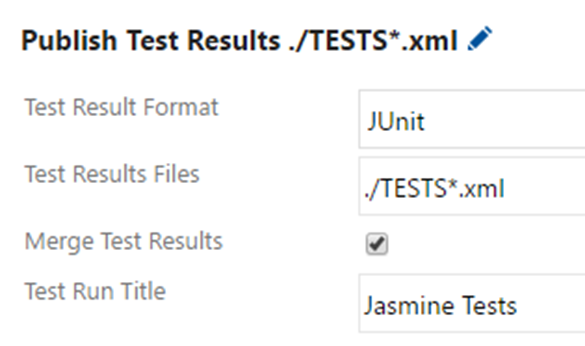

In general I was not surprised to see our project work pick up momentum with Angular 2. I was surprised to see the strides we made with Agile and adoption of DevOps. We were able to automate deployment for several large customers and closed out the year with a strong focus on containers (Docker), mobile (with both Ionic and Xamarin), and mobile DevOps.

The Year of the Blog

This blog should get a new name because I haven’t posted nearly as much content related to C#. Most of my focus has been on front-end, JavaScript and TypeScript development, with a lot of Angular 2, as well as Agile and DevOps concerns. Total sessions and page views are down from last year, but I’m not surprised because I did not post nearly as frequently. Instead, I presented dozens of talks, published several articles, and focused heavily on building the app dev practice at iVision.

Top referrals to my personal blog were from Twitter, Stack Overflow, a previous company I worked for, and DZone.

Demographics remain similar, with a predominantly young, male audience, the majority from the U.S., followed by India, the United Kingdom, Germany, and Russia.

Chrome continues to dominate the browsers that visit my site, edging up to 73% from last year’s 67%. Firefox holds second place at 11%, and Internet Explorer is nearly tied with Safari at around 5% each.

Despite publishing several dozen posts this year, older articles remain the most viewed. The top three were:

- The Top 5 Mistakes AngularJS Developers Make (but when I give Angular talks, it seems like people are still making them)

- Model-View-ViewModel (MVVM) Explained (that is a six-year-old article, also translated into Spanish!)

- Windows 8 Icons (huh?!)

A goal of mine for 2017 is to be more consistent with this blog and balance my writing between work, this blog, and the various sites I freelance for.

The Year in GitHub

Last year I became really active with GitHub. I’m finally used to and comfortable with Git, and I do so much work with Node.js and other open source projects that it was just a logical transition.

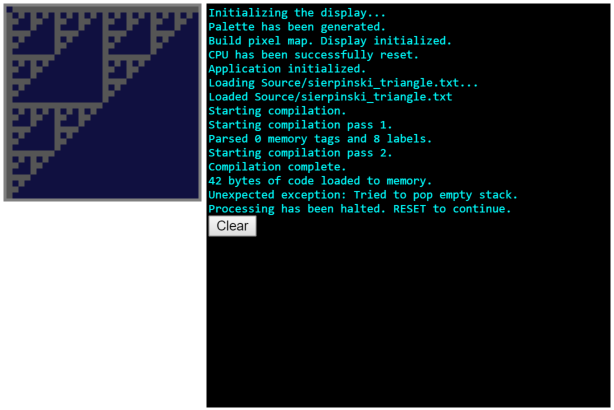

I closed out the previous year explaining how dependency injection works with the jsInject project (I plan to package that for npm soon); rewrote my Angular 1.x Health App in Angular 2 and ECMAScript 2015 with Redux and Kendo UI; ported my 6502 emulator to Angular 2 with TypeScript; wrote a text-based adventure game; created a full-day Angular 2 and TypeScript workshop; and recently published a simple microservices locator.

I’m looking forward to many more open source projects in 2017!

The Year in Twitter

This year was interesting on Twitter. I continued to gain followers steadily, but it was much more “two steps forward, one-and-a-half steps back” than in previous years. I’m not sure if it’s due to the breadth of topics, typical attrition, or some other factor, but growth has been slower.

To date, all of my Twitter growth has been organic. I haven’t signed up for any “gain x followers” services and do not follow others for a follow back. I try to keep the list of those I follow at just under 1,000 and prune the list based on signal-to-noise ratio, people I genuinely know, and people with topics or feeds that I am passionate about.

Most Viewed

With no competition, Angular 2 was the topic on Twitter this year. All top views are related (even RxJS is popular due to its inclusion in the Angular 2 distribution).

Building #Angularjs 2.0 #Angular2 components "on the fly": https://t.co/I83X5uETJD an exmaple with dialogs

— Jeremy Likness (@jeremylikness) November 17, 2016

...and now #Angular2 2.2 is available https://t.co/JTaHT0eiPu #angularjs #ng2

— Jeremy Likness (@jeremylikness) November 16, 2016

A simple guide to debugging #RxJS https://t.co/W1E8h1GojT

— Jeremy Likness (@jeremylikness) December 12, 2016

Most Liked

The most liked tweets closely followed the most viewed, with the exception of:

Real-time applications using #ASPNETCore, #SignalR & #AngularJs https://t.co/PhJxAQoHVi

— Jeremy Likness (@jeremylikness) October 10, 2016

Most Clicked

The top clicked tweets align with the top viewed and liked. The fifth most popular was this critique of Angular 2, which many told me "wasn't fair," but I like to post contrasting articles for balance in my feed:

Although I don't agree with the title, #Angular2 developers can learn from this in-depth critique https://t.co/stvD77MGPx Some valid points

— Jeremy Likness (@jeremylikness) December 2, 2016

2017 Predictions

What do I predict for 2017?

Personally, I will continue to speak as often as I can. I love reaching the developer community, and all of my talks are based on real-world experience. I’ve found it helps to connect when you are able to relate technology in terms of “lessons learned” and case studies “in the real world.”

I hope to continue to grow my knowledge in the DevOps space and believe we will see an exponential increase in containerized (Docker-based) workloads in 2017. With the introduction of .NET Core and SQL Server on Linux, we’re also going to see many organizations shift from traditional Windows-based infrastructure to commodity Linux machines running as VM and Docker hosts. Finally, I believe that in 2017 the “native vs. hybrid” debate will fade and the question will truly become, “What is your development tool of choice?” as options like NativeScript and Xamarin enable developers to write native mobile apps using the languages and development environments of their choice.

My last prediction? I think I’ll be using a lot of Visual Studio Code next year.

I wish you a very Merry Christmas and Happy New Year.

Until next time,