I finally decided to pull the trigger on a new laptop – this one is mainly for at-home use, so it is a lot more powerful than my portable Lenovo Yoga 13 that I absolutely love. I mentioned before that I wanted to go lightweight for travel, but unfortunately I still haven’t found that ultimate laptop that has all of the specs in one package – i.e. fast SSD, high memory, thin form factor, etc. So, my trade-off will be to have a bigger laptop that I use here and take on the road only when needed, and then keep the lighter Yoga for when I travel or want to be portable around the house. The ASUS VivoTab, which has served me well (I use it every day), will go to my daughter and I’ll use the Yoga in its stead.

I’m not much of a do-it-yourself type of person, so I wanted something I could configure completely and order online. I’ve had tremendous success with electronics and yes, even computers, through Amazon.com (I am aware of NewEgg.com and TigerDirect.com, etc.) so I decided to go there. There are two different vendors I’ve worked with through the Amazon site and I’m completely satisfied with both. I received my Lenovo from Eluktronics, Inc. and the HP TouchSmart came from ULTRA Computers. In both cases I wanted something custom (for the Lenovo it was an i7 when the only listed model was an i5, and for the TouchSmart I wanted a graphics card added to the 500 GB SSD model). In both cases the sellers responded immediately, set up a SKU to satisfy my request, then built and shipped it right away. Couldn’t be more pleased. I can’t recall the return policy from Eluktronics but ULTRA Computers offered me a 60-day return policy.

Configuration

To be completely honest, HP is not my first choice for a vendor – I’ve had a lot of success with ASUS, Dell, and Samsung products, and of course there is the MacBook series that can’t be ignored. However, after doing extensive research, the only configuration I could come up with that had everything I wanted was from HP. Here are the hardware specs I ended up with:

Intel Core i7-4700MQ (2.4 GHz, 6 MB cache, 4 cores with 8 logical) – turbo boost up to 3.2 GHz

Intel HD Graphics 4600 on the board but added the NVidia GeForce GT 740 with 2 GB dedicated memory

16 GB DDR3 RAM

512 GB Samsung 840 SSD

Realtek 10/100/1000 Gigabit Ethernet LAN

Intel 802.11 b/g/n WLAN (does NOT appear to be dual-band), WiDi

4x USB 3.0

1 full-size HDMI

1 RJ45

1 headphone/microphone combo

Multi-format card reader

15.6” diagonal LED-backlit touch screen 1080p

Full-size island keyboard with number pad

HP TrueVision HD WebCam

Integrated Dual Array Microphone

Beats Audio w/ 2 subwoofers, 4 speakers

Unboxing

Unlike the ASUS and Lenovo, the HP packaging was direct and to the point – not much invested in the presentation, but I couldn’t care less. I want the goods. So here are the goods, sans boxes and packaging foam:

From the top:

Note the reflective sticker (you can see me in the reflection). I’ll share the first con here: build quality. Although this will work fine for me, some will expect a more expensive laptop to be solid throughout. The keyboard and bottom feel like solid aluminum, but tapping on the lid gives the impression it is plastic painted to look like metal. I’m not sure if it is, but it certainly gives that sound and feel. It’s a little like a cheaper Acer laptop I used to own – you can press hard on the lid and see artifacts on the display on the inside. Some people will run the other way because of this, but I’ve had plenty of laptops built this way and haven’t had an issue so it’s not a deal breaker for me.

Even though this laptop is larger (15”) and heavier (5+ lbs.) it feels nothing like the thick, brick-like Dell I had before. I was concerned when I had only read the specs, but once it arrived my fears proved unfounded. Despite the power it is packing, a nice taper gives it a slim profile:

When facing the laptop, the left side has a security cable slot, vents, HDMI, USB 3.0 charging port, USB 3.0 port, digital card reader, and hard drive and power LEDs. This angle demonstrates the taper so you can see the tilt of the keyboard:

The right side features the dual audio out/in (microphone and headphones), 2x USB 3.0 ports, Ethernet status lights, full size RJ-45 jack, AC adapter light and the connector for the adapter.

Windows 8 and Support Woes

The second con was not really HP’s problem until I contacted support and discovered they were completely incapable of advanced troubleshooting. In the middle of installing my development environment, I did a reboot and suddenly found no Windows Store apps would launch. Apparently this is a widespread issue because a few searches turned up a lot of frustrated people whose systems had suddenly stopped launching Windows Store apps. The common fixes (latest display driver, ensuring more than 1024 x 768 resolution, etc.) were already addressed on my machine. Another common tack is to uninstall, then reinstall the apps – but my challenge was the Windows Store app itself wouldn’t launch (even after clearing the cache). I tried the system refresh and that failed. HP Support was clueless and obviously following a script, so I ended up just reinstalling Windows 8.

This was a little challenging as well. After installing it (happens FAST due to the SSD) I went onto the HP site and asked it to identify my system. It identified the wrong system so when I installed the Intel Chipset driver it froze my system. That meant a wipe and reinstall.

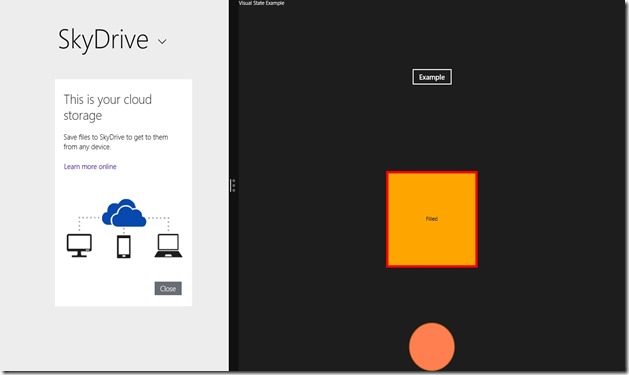

This time I specifically selected the model I knew it was, and the drivers worked fine. Once I got through that step the system worked well (even better without the bloatware on it) and I was very satisfied. A major “pro” with Windows 8 is the profile synchronization. After installing the OS, most of my tiles “went live” with data. All of the Windows 8 apps remembered my settings and just started working. I was surprised by how much actually synchronizes – for example, I went to the command prompt and it was configured exactly the way I like it (I make it use a huge font so it’s easy to present with).

Keyboard and Number Pad

The larger form factor gave HP room to provide a generous keyboard.

The keys are laid out nicely. I love the “island style” (keys are recessed so the lid closes flush without contacting them) and the keys have good travel. Having a full-sized number pad is also great. So how is the keyboard overall? My response here will be a bit loaded, so bear with me. If I hadn’t purchased a Lenovo Yoga I would say this is a great keyboard – one of the best I’ve typed on. I have read that some people find it loses key presses but that has not been an issue for me. It feels natural, I’m able to type extremely fast and don’t feel cramped. However, since I have used a Lenovo I can say it is still inferior to the Yoga keyboard – I still believe Lenovo makes the best keyboards in the industry. Anytime I feel the typing experience is great on the HP, I go over to my Yoga and suddenly it just feels better – the keys feel more solid, and the keyboard is a lot quieter than the HP.

The keyboard follows the ultrabook trend of mapping the top row to hardware actions rather than to the function keys themselves, so a developer has to remember to hold down Fn + F5 when debugging, for example. This can be turned off in the BIOS. The keyboard has substantial flex. When you press keys on the left side you can definitely see the keys around them moving. This is a deal breaker for some people. It doesn’t bother me and I wouldn’t have noticed it if I didn’t know to look for it, but it is something to keep in mind (I suggest trying out a model if it’s a concern). The arrow keys are horrible – the up and down keys share the space of a single key and I’m constantly missing the right one when I try to navigate. I’ll need to retrain myself to use the arrows on the number pad instead. The page up/down/home/end keys are horizontal on the upper right while the Lenovo has them vertical, so I am having to retrain myself there as well.

Touch Pad

I was pleasantly surprised by the touch pad. I’ve given up my mouse completely and now use the touch pad exclusively, so it is important to me that it works well, is responsive and handles gestures for pinch, zoom, rotate, and scrolling. When I first started using the laptop I did not like the rough texture – the Yoga is glass and smooth so this felt a little odd to me. The lack of separate left/right click buttons didn’t bother me because that is how the Yoga is configured and I’m used to it. What is interesting is that after a couple of days I’ve gotten used to the texture, and now the Yoga feels “slippery” with the glass. I guess it’s a question of what you use more.

The pad itself is incredibly responsive. I had read some concerns over quality but I haven’t found anything other than a glitch where two-finger scrolling stopped working once. I had to reboot and it magically came back and hasn’t happened again, but it is something to keep in mind. The Lenovo machines also suffered from these types of issues in the early days before the drivers stabilized. I use different gestures including the Windows 8 app and charm bars and these all work flawlessly. Some people commented on the touch pad being off center. If you look at the image, while it is off center relative to the frame of the laptop, it is actually centered on the keyboard itself, which is what is important, and it feels well-placed to me.

Wrist detection is fine and I have had no issues with accidentally brushing the touch pad and having the cursor jump when typing. I also haven’t experienced the converse: i.e. the touch pad becoming unresponsive because a portion of my finger is resting on it. However, I must also add that I try to type ergonomically, which means I don’t rest my wrists on the keyboard – they brush it but I do keep them elevated.

This entire blog post was composed on the new laptop.

Display

The model I have comes with a 1080p touch display. The display itself is an interesting combination of pros and cons. So, first the cons. Again, I’m spoiled by the Yoga because it has a beautiful, clear display with incredible viewing angles. It is bright and readable from almost any angle, and while it is not full matte so I do get some reflections, they don’t interfere with everyday use. The HP display on the other hand is extremely glossy. I purposely took a picture that shows my reflection as well as a strange line of “light” across the right side, which is the reflected sunlight through the edge of some blinds that are behind me. It is very reflective, more so than the Yoga, so I don’t imagine it would do well on a deck with sunshine. Fortunately, I typically have it set up in my office with no sunlight so it’s not a deal breaker, but again it is something to look at.

The viewing angles are also limited. Vertical is not so bad (you can see from straight on to down, but don’t try to look “up” from the bottom or it will wash out) but horizontal is a very narrow range. I guess optimistically you could say it has built-in privacy protection. Some people are very passionate about their viewing angles and I thought it would be a problem, but honestly after several days of use I haven’t noticed it. I’ve used it on my desk, on a table in the office and on my lap and it works fine. When viewed straight on, it is very bright and clear.

That segues into the pros: the display is very crisp and clear. I don’t even bump the font size despite it being 1080p because I love my large workspace and can read even 8pt fonts fine. I used a monitor calibration tool and it did very well across the board (again, provided I am in the right viewing window). For what I do – heavy development and writing – it is perfect. Honestly I feel like I fooled myself into settling for the Yoga’s 720p display because it is just so much more functional for me to have the full 1080p. When you are in the optimal viewing angles the display is superb; it only loses that quality when you are viewing at extreme angles, which typically wouldn’t be the case anyway. Although the picture above shows the glossiness of the display, I worked for a full day in that same environment with no issues or distractions because it is clear and bright when viewed straight on.

Fingerprint Scanner

How cool is that? I thought this would be more of a “nice to have” that gives someone bragging rights (I have Mission Impossible on my laptop) but now I’m going to be spoiled even more. It turns out that despite the possible added security (and I know fingerprint readers have been around for quite some time) it is convenience that wins out. As a corporate computer it is constantly locked when I step away, and being able to unlock and log in with a simple swipe of my index finger is quite nice.

Audio

I didn’t really factor audio into my purchase decision at all. This is because I typically use headphones and microphones so the built-in audio is just a bonus. The model comes with Beats Audio which I assumed was sort of a gimmick – OK, great, two subwoofers and four speakers, wow (twirl finger in the air). Upon receiving it … WOW! The audio is fantastic. In fact, I switched from using Skype with headphones to using the speakers (I work from home, so no one to distract – if my daughter is in one of her classes in the next room I’ll go back to headphones for that). The sound is really, really clear and has a great range despite coming from a laptop. I am really impressed with how they engineered the audio. I played some Netflix with the volume turned all the way up and it sounded fantastic, including the lower ends. I can see this as an easy “set it on the table and watch videos without headphones” laptop.

The built-in microphones are great as well. There is an array on either side of the webcam and I’m assuming it uses those for noise cancellation, etc. I know on Skype with the speakers blasting out and me talking, the other attendees told me there was absolutely no echo and my voice came through crystal clear. So checkbox for remote team collaboration with this laptop (Skype, GTM, and WebEx now fully tested).

Backlit Keyboard

The backlit keyboard was another thing I wrote off as a “senseless perk” and never paid much attention to. It’s an option on this model but mine was fully loaded so they threw it in for me and … I like it! In fact, I love it. I didn’t realize how often I’d come into the office in the dark and not want to turn on a bright light but would fumble around on the keyboard. My testing was rebuilding it while watching The Hobbit with my daughter. Lights were off for full movie effect and the backlight allowed me to tap in commands occasionally when needed. I saw one review that complained about the bleed around the keys but it’s really only evident when you are looking at the keyboard from the front (when would you do that?). From a normal position it is perfectly fine. Here’s a snapshot of the keyboard with the lights on:

The only forehead slap with this is the way you turn it on and off. There is a function key wired to do this (see F5 in the image), but although the wireless (airplane mode, F12) key has its own little indicator for when wireless is on or off, the backlight has no indicator. If I were to engineer this keyboard, I’d have a little light on the backlight function key so you can find it in the dark to turn on the keyboard. As it is you have to fumble around or tilt the screen to find it, and only THEN, once it’s on, are the keys all illuminated.

Performance and Compatibility

So here is where it gets really exciting. The performance of this laptop is outstanding. It features the latest Intel 4th generation “Haswell” chips, a capable discrete video card (not one for extreme gamers but fine for a development machine) and a blazing fast SSD. The SSD is the Samsung 840 series and it flies. My “non-standard” benchmarks: publishing a database, which includes populating tens of thousands of sample records, went from 3 – 4 minutes on my Lenovo Yoga to under one minute on this machine. Builds of a 40+ project solution went from 5 – 7 minutes down to 2 – 3 minutes. Installing Office (yes, ALL of Office) took about 5 minutes. Visual Studio took longer to download than to install. Bottom line: it performs well.
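To put rough numbers on those timings, here is a quick back-of-the-envelope sketch in Python. It only uses the minute ranges quoted above; the function and variable names are made up for illustration, and “under one minute” is treated as a one-minute upper bound, so the database figure is a floor rather than a measurement.

```python
def speedup_range(old_min, old_max, new_min, new_max):
    """Return the (lowest, highest) speedup factor between two time ranges in minutes."""
    # Smallest speedup: the fastest old run compared with the slowest new run.
    lowest = old_min / new_max
    # Largest speedup: the slowest old run compared with the fastest new run.
    highest = old_max / new_min
    return lowest, highest


# Build times: 5-7 minutes on the Yoga, 2-3 minutes on the new laptop.
build_low, build_high = speedup_range(5, 7, 2, 3)

# Database publish: 3-4 minutes on the Yoga, "under one minute" on the new laptop.
# Assumption: use 1 minute as the slowest new time, which gives a lower bound only.
db_low = 3 / 1

print(f"Build speedup: {build_low:.1f}x to {build_high:.1f}x")
print(f"Database publish speedup: at least {db_low:.0f}x")
```

By this rough math the builds come out somewhere between about 1.7 and 3.5 times faster, and the database publish at least 3 times faster.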

I had no driver issues with anything I plugged into it – my Targus USB was recognized immediately, and so was the Lenovo ThinkVision mobile I use when on the road. My headphones connect over Bluetooth without any problems.

I’m sure you can look up various benchmarks and other performance specs online but here’s the Windows Experience Index from my laptop to give you an idea of how it does:

The Intel 4600 built-in GPU is what kicks the overall score down. You can see the NVidia is slightly better and then everything else is great. The SSD really makes a difference – it’s almost twice as fast as the one in my Yoga. When I go onsite and power on my laptop, it’s literally turn it on, wait a few seconds, and swipe my index finger. I’m there and ready to go.

Battery

This is something I haven’t tested extensively. My brief test involved switching to the integrated graphics card then watching full screen video. I used the Beats Audio for a portion and then switched to headphones. After 3 hours the battery showed about 45 minutes remaining, but the estimates seemed to consistently run low so I’m guessing I would have had another few hours. It’s enough to power the laptop through a flight from Atlanta to Seattle, so that works for me.

Conclusion

I had some trepidation when I made the purchase. I had read some negative reviews (although the computer is rated very high overall on HP’s own site and Amazon) and had a bad experience with the Windows Store issue, but once I worked through that and used it, I definitely think it’s a keeper. If you don’t need the memory and 4th generation processors then I’d suggest going for a Yoga or Samsung Chronos (and if you can wait, Samsung will likely come out with more powerful configurations) but otherwise this is the best performance I could find that still maintains a good form factor despite the size. The only two things I’d change would be better viewing angles on the display and a Lenovo keyboard, but until Lenovo gives me an option with the 4th generation processors that has 16 GB of RAM and is SSD equipped, I’m sticking with this one. This is definitely the development workhorse I was hoping for. I’ll give my ASUS to my daughter, and my Yoga will become my “convenience” laptop that I keep downstairs for social media and quick updates or watching videos, while I keep this one plugged into my Targus USB 3.0 docking station so I get a full three monitors.